Overview and status of a project to make MIDI contra dance music.

November 24, 2004

GOALS AND MOTIVATIONS

This project started with a need for music for square dance demos

by non-callers. I thought I could introduce musical features which

would make phrasing obvious and also cue where the calls were to be

made. The problem of making music for small contradances is similar.

Small dance groups do not always have access to the traditional live

music for dancing. The alternative, recorded music, has limitations

in the length of the tunes, and possible legal issues with respect to

copyrights and performance rights. This project is an attempt to make

customizable dance music (with respect to length, tempo, variety, etc.)

available without legal issues concerning music or software. We start

with traditional (public domain) melodies and add a rhythmic backup

to transform them into something danceable. Variety is achieved with

varied instrumentation and dynamics. Control over emphasis allows one

to make beats and phrasing more obvious to beginning dancers and callers.

The software (VAMP) is free (GPL) and runs under Linux and Windows

(Mac OS X should work, but has not yet been tested). MIDI files can

be played "live" or converted into WAV files and recorded on CDs.

CHALLENGES: SOLVED AND REMAINING PROBLEMS

From a simple program which reads music in "ABC" notation and produces

a MIDI file with the correct notes (pitch and duration), we have gone

on to consider how to assign chords to a melody, what dynamic and chordal

tricks are needed to mark the typical 8 beat phrases, and have

discovered some limitations inherent in MIDI players.

MIDI files lack a certain portability because the translation of

"velocity" to loudness is not standardized. The MIDI to WAV converter

that I use in Linux (Timidity++, also available on other platforms) has

several options, including the logarithmic-exponential one most congenial

to adding dynamic effects, but the Windows Media Player uses a quadratic

function. The standard amplitude envelope works well for percussive

instruments such as piano or guitar, but not so well for bowed strings.
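To make the portability problem concrete, here is a minimal sketch comparing two velocity-to-amplitude curves. Both formulas are assumptions chosen for illustration (a square law and an exponential law spanning an assumed 40 dB); they are not the actual tables used by Timidity++ or by the Windows player.

```python
import math

def quadratic_gain(velocity):
    """Amplitude proportional to the square of the normalized velocity."""
    return (velocity / 127.0) ** 2

def exponential_gain(velocity, range_db=40.0):
    """Amplitude that changes by a fixed number of dB per velocity step.

    range_db is an assumed span between velocity 0 and velocity 127.
    """
    db = range_db * (velocity / 127.0 - 1.0)  # 0 dB at full velocity
    return 10.0 ** (db / 20.0)

def to_db(gain):
    """Convert a linear amplitude ratio to decibels."""
    return 20.0 * math.log10(gain)
```

With the exponential curve, equal velocity steps give equal loudness steps in dB, which is why it is convenient for accent patterns. With the square law, the same velocity step is a much larger dB change at low velocities than at high ones, so the same MIDI file sounds different on the two players.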

Current issues under consideration include modifying fiddle sounds

by slurs and accents (or a set of "riffs" used like chords, but for

dynamics). Some local variation in note duration and synchronization

(rubato) may also add to the naturalness of the sound.

All through this endeavor there has been the problem of making someone

else's musical intuition explicit enough to extract quantitative MIDI

parameters to give to the computer. This is the classic "expert systems"

problem. It has been approached iteratively, making models and

soliciting feedback from a number of musicians, without whose help and

patience this project would have been much harder and much less successful.

CONCEPTUALIZATION

Music has a number of aspects (pitch, rhythm, volume, harmony) which

can be abstracted from the totality of the sound. VAMP is an experiment

to see what sort of music one can reconstruct from merging abstracted

time sequences: melody (pitch and duration), chords (harmony and beat),

accent and phrase (periodic variations in the loudness of notes), as

played by selected instruments.

If one thinks of a score as time aligned music with a separate

staff line for each voice/instrument (two staffs for a piano), then

what VAMP does is to split each voice line into two: the pitch and

duration sequence of melody or harmony, and the loudness variations

as specified by accent or phrasing. One could even consider melody

as a pitch contour in time which is sampled at discrete instants.

At first consideration, this idea is problematic because a change in pitch

normally signals a new note (when notes are thought of as having specific,

separated pitches). So either think of a continuously changing pitch in

time and use the standard pitch nearest the frequency when sampled, or

alternatively, consider stepwise pitch changes at the fastest rate

(say, 1/16 notes) and sample less frequently. I have done this by

hand occasionally in making extra parts for accompanying instruments

(e.g. dulcimer playing 1/8 notes from a mostly 1/16 note fiddle part).
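The second view (stepwise pitch changes sampled less frequently) can be sketched as follows. This is only an illustration of the idea, not VAMP code; the function name `resample` and the representation of a melody as (pitch, duration) pairs are assumptions.

```python
from fractions import Fraction

def resample(notes, step):
    """Sample a melody at regular intervals.

    notes: list of (pitch, duration), durations as Fractions of a whole note.
    step:  sampling interval, also a Fraction of a whole note.
    Returns a list of (pitch, step) taken at times 0, step, 2*step, ...
    """
    total = sum(duration for _, duration in notes)
    sampled = []
    t = Fraction(0)
    while t < total:
        elapsed = Fraction(0)
        for pitch, duration in notes:
            # Take the pitch that is sounding at time t.
            if elapsed <= t < elapsed + duration:
                sampled.append((pitch, step))
                break
            elapsed += duration
        t += step
    return sampled

sixteenth = Fraction(1, 16)
fiddle = [("D", sixteenth), ("E", sixteenth), ("F", sixteenth), ("G", sixteenth)]
dulcimer = resample(fiddle, Fraction(1, 8))
```

Here `dulcimer` keeps the first and third fiddle notes, each stretched to a 1/8 note. A hand-made part would often choose differently (for example, keeping the note on the beat or a chord tone), so this is only the mechanical starting point.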

Because of the repetitive nature of contra dance music, it lends itself

to being thought of as larger chunks of sound which are replayed with

variations. This and ease of programming (and data entry) led to a text

based user interface rather than the graphical interfaces in which one can

(and must) tweak individual notes. I also wanted to investigate the effects

of keeping certain aspects constant (say the melody) and changing chords,

or changing the dynamics or instrumentation.

A programmer views repeats as loops, in this case synchronized and with

different time spans/granularity:

-- oscillations (the musical samples, envelopes)

-- notes (pitch, duration, loudness)

-- phrases (hypermeter)

-- repeats (loudness, which instruments)

-- choice of tunes and medleys

-- composition of the band (possible timbres).

VAMP tends to treat these hierarchically, and to focus on those in the middle

(notes, phrases, repeats, choice of instruments).

BACKUP AND HYPERMETER

Underlying and providing the rhythmic framework for a dance melody are

the chords. Dance music uses a basic chord framework with a strong octave

unison on the beats and a less emphasized triad chord on the offbeat.

This pattern is called a "vamp" by musicians (ref: Peter Barnes,

'Interview with a Vamper'). Moreover, the unisons on the beat have an

accent pattern which repeats over a phrase (usually 8 beats); this is

called a hypermeter to distinguish it from meter derived accent patterns

within a measure (usually 2 beats). We have found that using a distinguishing

chord pattern on the last beat of a phrase can suggest a breath pause

without actually breaking the rhythm. Other special chord patterns

can be used to emphasize dance figures such as balances; providing

examples of these is still to be done.
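The vamp and hypermeter described above can be sketched as a list of timed events. The velocity values, the choice of octaves, and the names `HYPERMETER`, `TRIADS` and `vamp_phrase` are all assumptions made for this illustration; only the accent pattern is modelled, not the distinguishing chord on the last beat.

```python
# Assumed accent levels (MIDI velocities) for the 8 on-beats of a phrase.
HYPERMETER = [110, 80, 95, 80, 100, 80, 95, 70]
OFFBEAT_VELOCITY = 60  # the offbeat triad is played more softly

# Major triads as MIDI note numbers (D4 = 62, G4 = 67, A4 = 69).
TRIADS = {"D": (62, 66, 69), "G": (67, 71, 74), "A": (69, 73, 76)}

def vamp_phrase(chords, ticks_per_beat=480):
    """Build one phrase of backup from a list of chord names, one per beat.

    Returns a list of (tick, notes, velocity) tuples.
    """
    events = []
    for beat, name in enumerate(chords):
        root = TRIADS[name][0]
        start = beat * ticks_per_beat
        # On the beat: the root doubled at the octave, accented by hypermeter.
        events.append((start, (root - 24, root - 12), HYPERMETER[beat % 8]))
        # On the offbeat: the full triad, less emphasized.
        events.append((start + ticks_per_beat // 2, TRIADS[name], OFFBEAT_VELOCITY))
    return events

phrase = vamp_phrase(["D", "D", "D", "D", "G", "G", "A", "D"])
```

Changing only `HYPERMETER` changes how strongly the phrase boundaries are marked while the chords and melody stay the same.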

THE SOUND OF A FIDDLE

A straightforward translation of pitches and durations into MIDI commands

produces uninspired, "computerized" music. Even though samples of different

instruments are used for the sounds, there is typically a single model

for the shape or envelope of each note. This is the A(H)DSR (attack (hold)

decay sustain release) shape with a relatively fast rise in amplitude of

the sound and a much slower decay. The shape parameters usually are defined

in the sound sample itself and cannot easily be modified dynamically during

playback. Such a model represents percussive instruments such as pianos,

guitars, and drums quite well, but does not do a good job on bowed strings.
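For reference, a piecewise-linear version of such an envelope might look like the sketch below. The parameter values are arbitrary assumptions; in a real sound font or patch they are stored with the sample and the segments are not necessarily linear.

```python
def held_level(t, attack, hold, decay, sustain):
    """Envelope level t seconds after note-on while the note is held."""
    if t < attack:
        return t / attack                      # fast rise to full amplitude
    t -= attack
    if t < hold:
        return 1.0                             # optional hold at the peak
    t -= hold
    if t < decay:
        return 1.0 - (1.0 - sustain) * t / decay   # fall to the sustain level
    return sustain

def adsr(t, note_len, attack=0.01, hold=0.0, decay=0.1, sustain=0.6, release=0.3):
    """Amplitude (0..1) at time t; note-off happens at t == note_len."""
    if t < 0.0:
        return 0.0
    if t <= note_len:
        return held_level(t, attack, hold, decay, sustain)
    # After note-off: fade linearly from the level reached at note-off.
    start = held_level(note_len, attack, hold, decay, sustain)
    return max(0.0, start * (1.0 - (t - note_len) / release))
```

Note that the release tail continues after note-off, so with independent notes the tail of one note overlaps the attack of the next.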

A fiddle is one of the traditional instruments heard in contra dance

music. Initial reactions characterized the sound as more like a calliope

than a violin, even though several different sound samples were tried (MIDI example).

Fiddle style for contra music tends to separate notes rather than run them

together, so we tried adding some staccato (maybe 1/3 of a 1/16 note)

which adds a bit to the realism (MIDI example).

Some sound patches or sound font samples worked better than others, but

nothing was very convincing. I even tried to make a GUS patch with samples

for almost every note using samples obtained from the University of Iowa

Electronic Music Studio; that didn't improve things much, but I may have

needed to add vibrato.

Then I looked at the wave envelopes of a professionally played scale,

and tried the experiment of making isolated sound samples from the scale

by chopping out the transitions between notes. The original scale sounded

like a violin, but the isolated approximately constant amplitude samples

sounded like the MIDI music. Upon further consideration, one realizes that

the MIDI sounds were generated with an ADSR envelope and were independent,

so that each note could decay into the attack of the next. That doesn't

happen with notes played on the same string, and there is also a pitch

bend effect with notes played on the same string with continuous bowing.

About this time I learned of 'shuffle bowing' and other fiddle riffs, where

one plays with a combination of slurs and staccato, with a characteristic

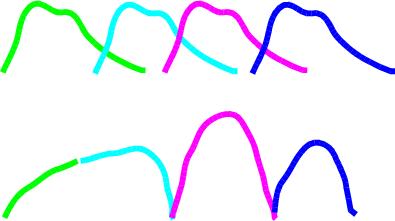

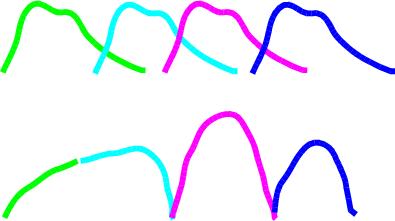

accent pattern for each beat. This sketch contrasts the time and amplitude

dependence for independent ADSR shaped notes with my first model for a

shuffle bowed fiddle:

A short synthesized sequence was generated with a sawtooth waveform, using a

four-note shuffle bowing pattern: slur notes 1 and 2, accent note 3 (staccato),

and play note 4 staccato. The rise and fall of

the start and end of the slur and of each staccato note was a parabola,

and some noise was added to the start of the notes to simulate bowing.

This experiment sounds better than any previous attempts (WAV example).
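A rough reconstruction of this kind of experiment is sketched below. It is not the original program: the sample rate, edge length, noise level, gains and the instantaneous pitch change inside the slur are all assumptions.

```python
import random

RATE = 22050  # samples per second (an arbitrary choice)

def sawtooth(phase):
    """Sawtooth in [-1, 1) for a phase measured in cycles."""
    return 2.0 * (phase % 1.0) - 1.0

def parabolic_envelope(i, n, edge):
    """Parabolic rise over the first `edge` samples and fall over the last."""
    if i < edge:
        x = i / edge
        return 1.0 - (1.0 - x) ** 2
    if i >= n - edge:
        x = (n - 1 - i) / edge
        return 1.0 - (1.0 - x) ** 2
    return 1.0

def stroke(freqs, note_dur, sounding, gain, rng):
    """One bow stroke covering len(freqs) notes under a single envelope.

    sounding is the fraction of the stroke that is audible; the rest is silence.
    """
    per_note = int(note_dur * RATE)
    slot = per_note * len(freqs)
    n = int(slot * sounding)
    edge = int(0.02 * RATE)       # 20 ms rise and fall
    noise_n = int(0.03 * RATE)    # 30 ms of bow noise at the start
    out, phase = [], 0.0
    for i in range(slot):
        if i >= n:
            out.append(0.0)       # gap after a staccato note
            continue
        freq = freqs[min(i // per_note, len(freqs) - 1)]
        phase += freq / RATE      # accumulate phase so pitch changes do not click
        sample = sawtooth(phase)
        if i < noise_n:
            sample += 0.3 * rng.uniform(-1.0, 1.0) * (1.0 - i / noise_n)
        out.append(gain * parabolic_envelope(i, n, edge) * sample)
    return out

def shuffle(freqs, rng, note_dur=0.125):
    """Four notes: slur 1 and 2, accent 3 (staccato), play 4 staccato."""
    return (stroke(freqs[0:2], note_dur, 1.0, 0.5, rng)
            + stroke(freqs[2:3], note_dur, 0.5, 0.75, rng)
            + stroke(freqs[3:4], note_dur, 0.5, 0.5, rng))

samples = shuffle([293.66, 329.63, 369.99, 440.0], random.Random(0))
```

The resulting list of floats in the range -1 to 1 can be scaled to 16-bit integers and written out with Python's standard `wave` module for listening.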

VAMP was then modified to keep track of slurs and ties, and to use

that information to pitch bend between the notes involved without

having any NOTE_ON or NOTE_OFF messages (and hence no independent notes

with tails decaying into the next). Moreover, the decay of the end of the

slur and of the staccato notes was shaped using polyphonic aftertouch so

that the amplitude was almost zero by the time the next note started.

This reproduced the sawtooth synthesis experiment, but applied to general

ABC melodies. It produces a fairly convincing result, controllable by

several parameters: rate of slur and time to mute the note (MIDI example).
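The MIDI messages involved can be sketched as raw bytes. The status bytes (0xE0 for pitch bend, 0xA0 for polyphonic aftertouch) and the 14-bit bend value centered on 8192 come from the MIDI standard; the bend range of 2 semitones, the linear ramps, and the function names are assumptions, and how a given player maps aftertouch pressure to amplitude is not guaranteed.

```python
def pitch_bend(channel, semitones, bend_range=2.0):
    """Pitch bend message for an offset in semitones.

    bend_range is the assumed full-scale bend of the player, in semitones.
    """
    value = 8192 + round(8192 * semitones / bend_range)
    value = max(0, min(16383, value))          # clamp to 14 bits
    return bytes([0xE0 | channel, value & 0x7F, value >> 7])

def poly_aftertouch(channel, note, pressure):
    """Polyphonic key pressure message for one sounding note."""
    return bytes([0xA0 | channel, note & 0x7F, max(0, min(127, pressure))])

def slur_bends(channel, interval, steps):
    """Bend in equal steps from the first pitch of a slur to the second."""
    return [pitch_bend(channel, interval * k / steps) for k in range(1, steps + 1)]

def mute_ramp(channel, note, start_pressure, steps):
    """Lower the pressure to zero in equal steps before the next note starts."""
    return [poly_aftertouch(channel, note, round(start_pressure * (steps - k) / steps))
            for k in range(1, steps + 1)]
```

In a MIDI file each message would also carry a delta time; the number of steps and their spacing correspond to the "rate of slur" and "time to mute" parameters. A slur wider than the bend range cannot be expressed this way without first changing the player's bend range.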

There is still some effect involving the nature of the sound samples.

Also, depending on the rhythmic pattern of the melody, several different

slur patterns will be used by a fiddler, so either extensive work has

to be done to incorporate these into the melody ABC, or riff macros

should be defined and used to specify pitchbend, staccato, and accent

in a manner similar to the way chords define simultaneous notes. This

would give one the flexibility to experiment with different patterns

without laborious editing. It has also been suggested that some local

variation in timing (rubato) might add to the realism of the sound.
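One possible shape for such riff macros is sketched here. Nothing like this exists in VAMP yet; the `Articulation` fields, the `RIFFS` table and the numeric values are hypothetical and only show how a named pattern could be applied cyclically to a melody, much as a chord name expands to a set of notes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Articulation:
    slur_to_next: bool   # pitch bend into the next note instead of a new attack
    sounding: float      # fraction of the nominal duration that is audible
    accent: float        # multiplier applied to the base velocity

# Shuffle bowing: slur 1 and 2, accent 3 (staccato), play 4 staccato.
RIFFS = {
    "shuffle": (
        Articulation(True, 1.0, 1.0),
        Articulation(False, 1.0, 1.0),
        Articulation(False, 0.5, 1.3),
        Articulation(False, 0.5, 1.0),
    ),
}

def apply_riff(pitches, riff_name, base_velocity=80):
    """Attach the riff's articulation to each pitch, repeating the pattern.

    Returns a list of (pitch, slur_to_next, sounding, velocity) tuples.
    """
    riff = RIFFS[riff_name]
    result = []
    for i, pitch in enumerate(pitches):
        art = riff[i % len(riff)]
        velocity = min(127, round(base_velocity * art.accent))
        result.append((pitch, art.slur_to_next, art.sounding, velocity))
    return result

articulated = apply_riff([62, 64, 66, 69, 62], "shuffle")
```

Trying a different bowing pattern then means adding one entry to `RIFFS` and changing a name, leaving the melody ABC untouched.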

It becomes apparent that these measures will only suggest and not

reproduce the variety and expressiveness that a person can achieve

while playing an instrument. The purpose, however, is to provide a

rhythmic context for the dancers who are also occupied with

coordinating their movements with the music.

In searching Google for prior art, I have not found much that

explicitly deals with how to program envelopes to produce these effects,

but there is a lot of general discussion about legato and a fair number of

commercial high-end products and samples. My focus is more on what can be

achieved with an ordinary MIDI to wave converter without using multiple

samples or parallel tracks or a graphical composing tool. The goal has

been to make accessible and acceptable dance music.

GOOGLE[MIDI aftertouch bowed strings envelope] yields a discussion of

realism in MIDI sequences and a commercial afterprocessor called Style Enhancer.

GOOGLE[MIDI legato string] yields almost 9000 hits, and several of the

first 50 are useful and informative. However, most of them involve using

multiple samples for different bowing styles and different volume levels in

the context of professional sequencing software.
